At its recent Ignite conference, Microsoft announced three new chips:

- a hardware security module (HSM),

- a data-processing unit (DPU), and

- an HBM-enabled AMD Epyc server processor.

HSM

- What is an HSM? An HSM is a digital secrets vault. Historically sold as system-level products (e.g., from Thales and other vendors), HSMs also come as add-in cards (e.g., the Marvell LiquidSecurity 2 adapter). They can be virtualized and appear as software services. Underneath, however, they should be physical hardware that complies with Common Criteria or FIPS 140 and resists (ideally, is impervious to) software-based and physical attacks.

- Why employ an HSM? Every company has secrets, such as SSL/TLS private keys, that must be securely stored. Without a way to keep such secrets safe, the modern internet and the commerce that depends on it couldn't function. An HSM can do more than simply store secrets. It can also perform processing with them, which is more secure than transmitting them to a server processor. Thus, an HSM is both a vault and a processor. For example, an HSM can sign and validate certificates. In addition to protecting their own businesses, hyperscalers must provide corporate customers with the best security possible, and an HSM is essential to meeting this goal.

- Why make an HSM instead of buying one? There’s a good chance that Microsoft requested a customized HSM from an established vendor instead of designing its own from the ground up. Hyperscalers, such as Microsoft, have proprietary hardware roots of trust (HWRoT) and protocols, which require custom silicon. Moreover, they may require unusual interfaces, features, or performance.
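
The vault-plus-processor idea above can be illustrated with a minimal sketch. It is not how any real HSM is programmed: the `ToyVault` class is hypothetical, it uses a symmetric HMAC from Python's standard library as a stand-in for the asymmetric certificate signing an HSM would perform, and Python offers no real isolation. The point is only the shape of the interface, where data goes in and a signature comes out while the key never leaves.

```python
import hashlib
import hmac
import secrets


class ToyVault:
    """Hypothetical stand-in for an HSM: it holds a key and computes with it."""

    def __init__(self) -> None:
        # The key is generated inside the vault; no method returns it.
        # (Python's name mangling is a convention, not a security boundary.)
        self.__key = secrets.token_bytes(32)

    def sign(self, message: bytes) -> bytes:
        # The caller sends data in and gets a tag out; the key stays put.
        return hmac.new(self.__key, message, hashlib.sha256).digest()

    def verify(self, message: bytes, tag: bytes) -> bool:
        # Constant-time comparison avoids leaking information through timing.
        return hmac.compare_digest(self.sign(message), tag)


vault = ToyVault()
tag = vault.sign(b"certificate request")
print(vault.verify(b"certificate request", tag))  # True
print(vault.verify(b"tampered request", tag))     # False
```

In a real deployment, the boundary that this class only imitates is enforced by tamper-resistant hardware, which is why the key never has to travel to the server processor.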

DPU

- What is a DPU? The DPU term is nebulous but typically refers to a network-processing chip that could anchor a smart NIC, an add-in card with capabilities (including programmability) not found in a conventional network adapter. The DPU's immediate ancestors include network processors (NPUs) and multicore communication processors (e.g., Marvell Octeon). Indeed, some of the first smart NICs employed Octeon.

- Why employ a DPU? Further up the family tree, one will find mainframe I/O processors. Like those devices, a DPU has the key function of offloading I/O from the central host processor. Hosts are expensive resources and ill-suited to network processing, much as they are a poor fit for 5G processing. A DPU can preserve host-processor resources for general computing workloads. Moreover, a DPU provides a demarcation point between computing and networking, helping hyperscalers secure their systems.

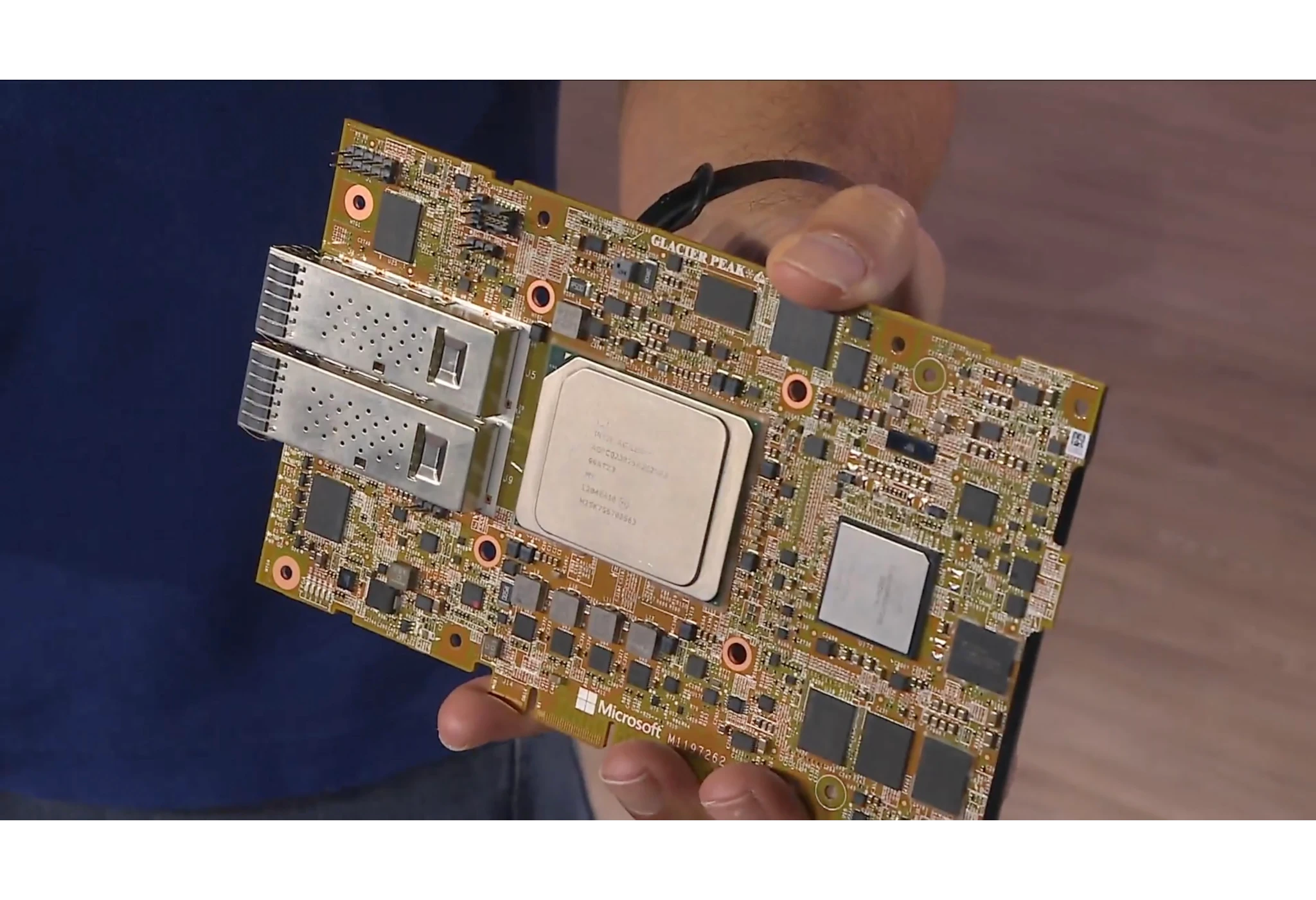

- Why make a DPU instead of buying one? Performance and scaling requirements lead hyperscalers to employ proprietary protocols, which fixed-function network controllers can’t handle. A custom design can better handle these protocols and integrate proprietary functions, such as an HWRoT as in an HSM. Microsoft has built its own smart NICs from off-the-shelf controllers, processors, and FPGAs, but a custom chip should be smaller, faster, and lower power. (This article’s hero picture above shows the company’s 2×100 Gbps Glacier Peak Azure MANA NIC based on what looks like an Intel/Altera Arria 10 FPGA and an NXP LX2162A processor.) Two years ago, the company supplemented its FPGA designers by acquiring Fungible, a struggling DPU startup that coined the DPU term.

Epyc HBM

Whereas the HSM and DPU will operate behind the scenes and be pervasive, the Epyc HBM faces customers and is a niche product for HPC workloads. Microsoft will employ it in clusters connected by InfiniBand, Nvidia’s semistandard (effectively proprietary) network technology popular in supercomputing.

As the name implies, HBM provides higher bandwidth (throughput) than conventional DRAM. This comes at the expense of capacity, however. Microsoft will offer virtual machines with up to 9 GB per core, but average per-core capacity will be only a little more than 1 GB. Still, that exceeds the fast memory of the previous V-Cache-enabled Epycs, which had 1.1 GB of L3 cache per socket. Those processors, however, could also have big conventional DRAM pools. Thus, some memory-intensive workloads will run better on one processor than the other, depending on their working-set sizes and total footprint.
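
The working-set argument above can be made concrete with a small sketch. Only the 1.1 GB L3 figure comes from the text; the DRAM and HBM capacities below are placeholder assumptions chosen for illustration, and the function and tier lists are hypothetical. The sketch also assumes, as the comparison above implies, that the HBM part has no conventional DRAM behind it.

```python
def fastest_tier_that_fits(working_set_gb: float,
                           tiers: list[tuple[str, float]]) -> str:
    """Return the first (fastest) tier whose capacity holds the working set."""
    for name, capacity_gb in tiers:
        if working_set_gb <= capacity_gb:
            return name
    return "does not fit"


# Tiers are listed fastest first; capacities are per socket, in GB.
VCACHE_EPYC = [("L3 cache", 1.1), ("DRAM", 768.0)]  # DRAM size is an assumption
HBM_EPYC = [("HBM", 100.0)]                         # HBM size is an assumption

for ws in (0.5, 50.0, 500.0):
    print(f"{ws:>6} GB: "
          f"V-Cache Epyc -> {fastest_tier_that_fits(ws, VCACHE_EPYC)}, "
          f"Epyc HBM -> {fastest_tier_that_fits(ws, HBM_EPYC)}")
```

Under these assumed capacities, a small working set sits in cache on the V-Cache part, a mid-size one favors HBM over DRAM, and a very large one fits only where a big DRAM pool is available.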

Importantly, the Epyc HBM processor provides more HBM capacity and bandwidth per socket than the Intel Xeon Max, a Sapphire Rapids processor. Although both AMD and Intel employ chiplets, AMD’s approach is more flexible. We expect the company created Epyc HBM by mating the Zen 4 compute dice from other Epycs with the I/O die and HBM stacks from the AMD MI300; i.e., the new product entailed almost no additional design time. Intel hasn’t announced a Max version of Granite Rapids. Because HBM-enabled server processors are niche products, it’s possible that in this generation, AMD will capture the entire market.

Bottom Line

Like other hyperscalers, Microsoft has specialized processor requirements, the resources to develop proprietary designs (such as a custom HSM and DPU), and the scale to justify a third party modifying its standard processors (as in the Epyc HBM case). Hyperscalers’ do-it-yourself approach narrows merchant-market semiconductor opportunities but creates new ones for suppliers with custom-silicon businesses and composable products.