-

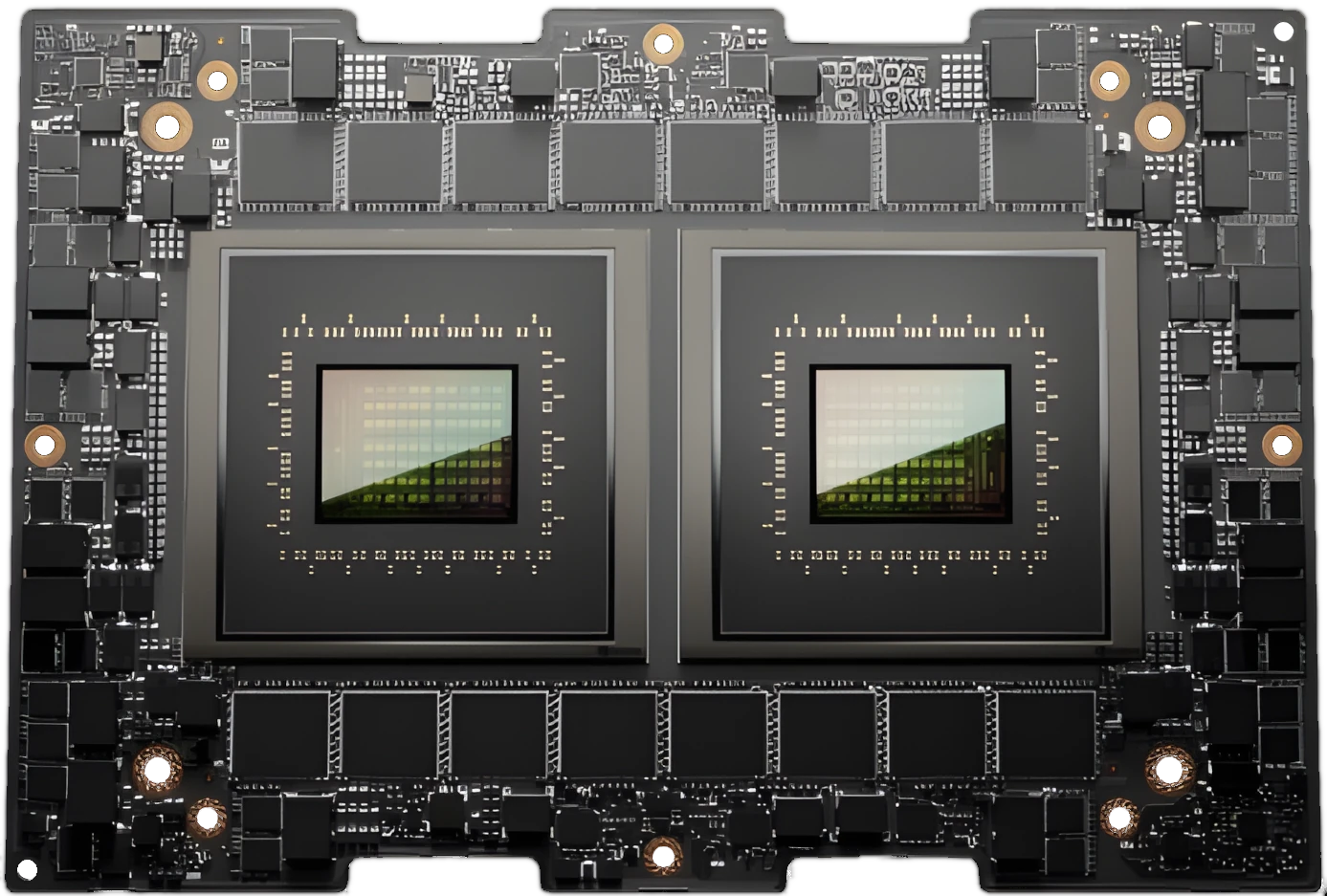

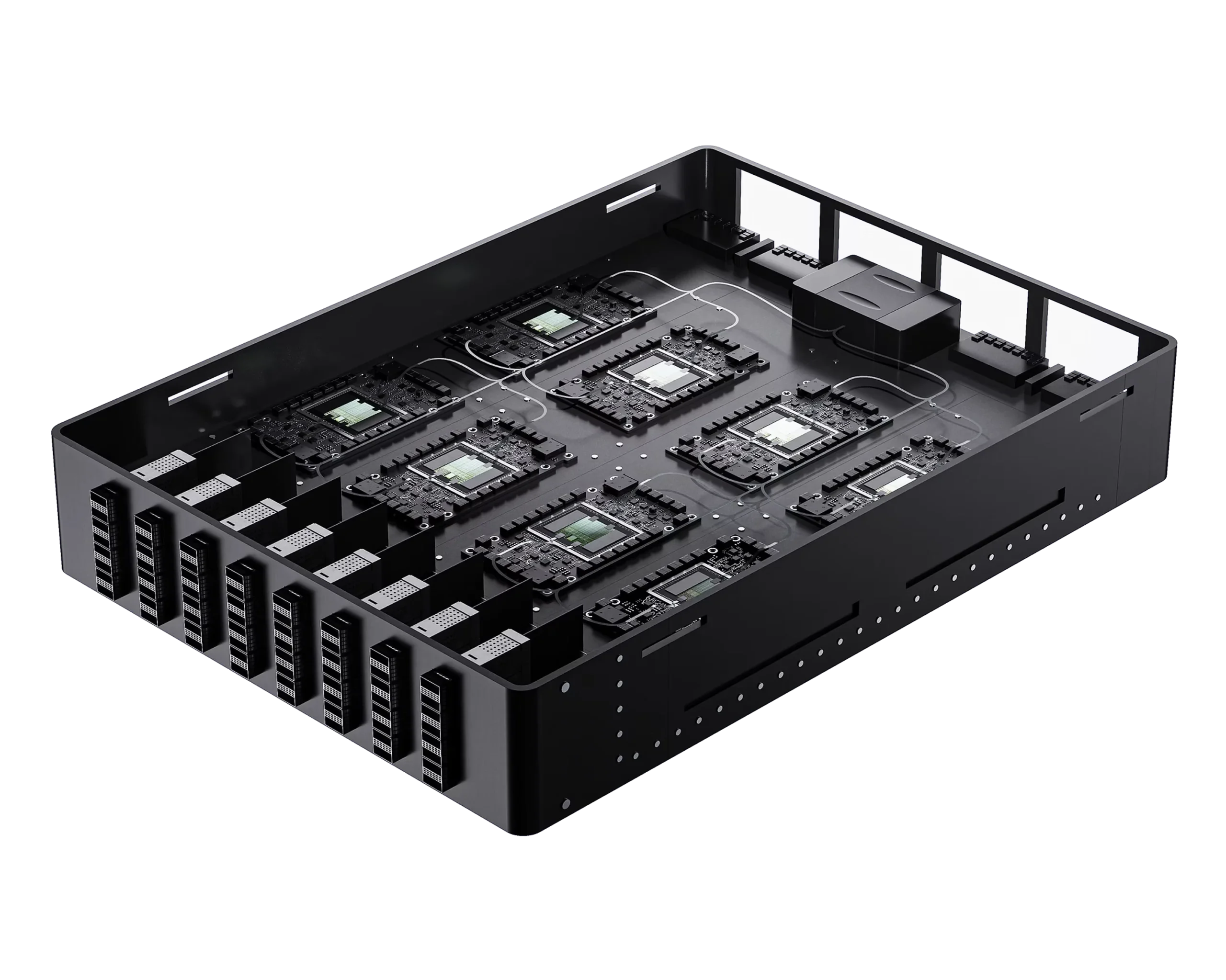

Will Tsavorite’s Composable AI Chiplets Be a GEMM Gem?

Tsavorite debuts its OPU (NPU) with $100M in orders, featuring Cuda support, Arm cores, and modular chiplet scalability. continue reading

- Cadence ChipStack AI Super Agent Cuts Design Time

  Cadence has released the ChipStack AI Super Agent, a productivity-boosting automated workflow for front-end chip design and verification.

- Neurophos Taps Metamaterials for AI Revolution

  Neurophos has raised $110 million to develop exaflop-scale photonic AI chips that use tunable metamaterials to overcome the “power wall” of traditional GPUs.

- Microsoft Maia 200 Beats Blackwell’s Efficiency

  Microsoft is deploying its second-generation AI accelerator. The new Maia 200 NPU targets inference, delivering raw performance similar to Nvidia’s Blackwell while requiring much less power. Maia employs only Ethernet, whereas other accelerators use a different technology for local (scale-up) interconnect. Microsoft has withheld details; its statements indicate that Maia …

Sponsored Content and Plugs

- Slash Server Costs Without Hardware or Software Changes

- Ceva Boosts NeuPro-M NPU Throughput and Efficiency

- MEXT Helps IT Leaders Find the Sweet Spot

- Chips Act Backs Chiplets

- Arteris Expands NoC Offerings for AI Accelerators
