Month: April 2025

-

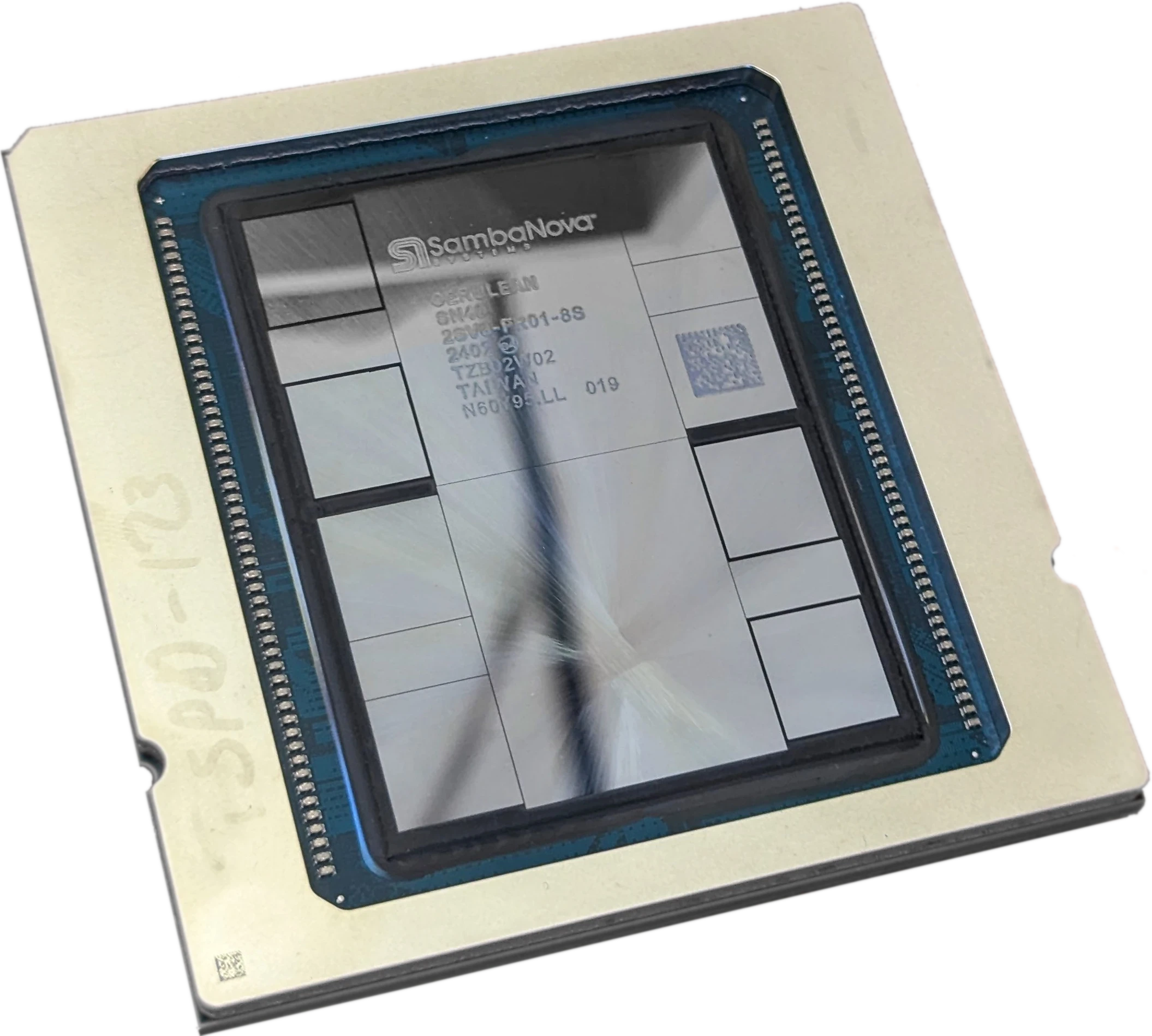

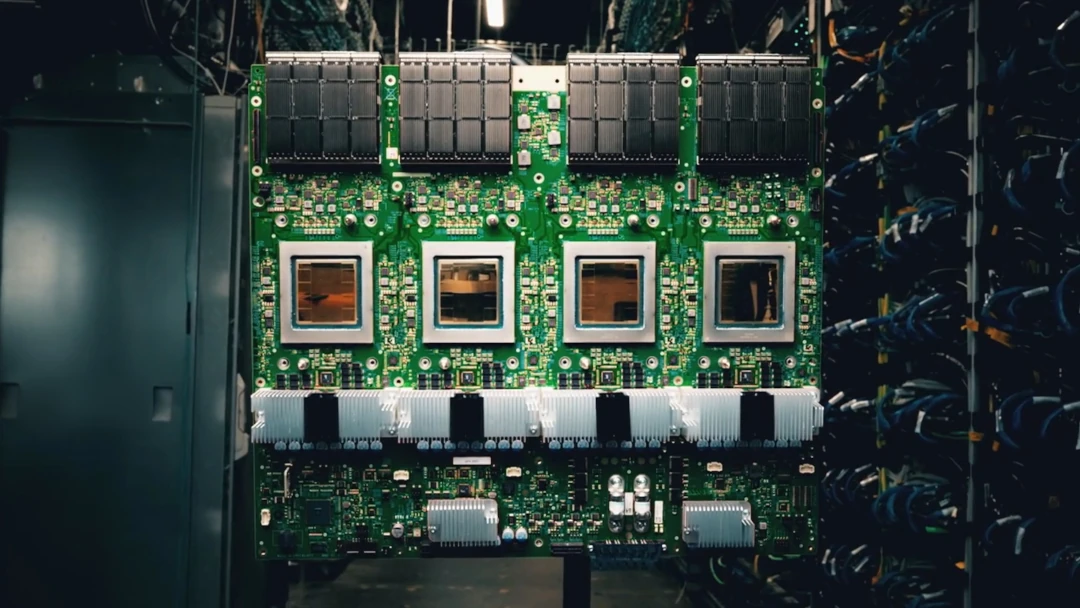

SambaNova Retreats from Training, Bets on Inference Services

SambaNova has cut its workforce by 15% and refocused on AI inference services to weather competition from GPU giant Nvidia. Built on a coarse-grained reconfigurable architecture (CGRA) with three memory tiers, its design beats GPUs on paper, but its real-world advantages are unclear.

-

Huawei’s Ascend 910C and 384-NPU CloudMatrix Fill China’s AI Void

As Huawei ramps up its AI chip efforts with the Ascend 910C NPU and CloudMatrix 384 system, it seeks to fill the void created by the ban on Nvidia GPUs. Despite more than five years of evolution and its original aim of tackling training, the Ascend 910 is competitive only in AI inference.

-

MEXT Helps IT Leaders Find the Sweet Spot

MEXT software helps IT leaders find the server configuration that balances capability and cost. By preemptively loading pages from storage, it lets them deploy servers with less DRAM, reducing hardware costs without sacrificing performance.

-

Google Adds FP8 to Ironwood TPU; Can It Beat Blackwell?

Google’s TPU v7, Ironwood, offers 10x faster AI processing than v5p. How did Google achieve this speedup, and how does Ironwood compare with Nvidia’s Blackwell?

-

Nvidia Blackwell Shines, AMD MI325X Debuts in Latest MLPerf

MLCommons has released new MLPerf data-center inference results. Also known as The Nvidia Show, the semiannual benchmark report adds new tests in this edition. Nvidia extends its lead, and AMD debuts the MI325X. Explore scores, scaling, new benchmarks (Llama 3.1), and key AI hardware takeaways.

-

MediaTek Kompanio Ultra Confronts Intel for Chromebook Plus Sockets

MediaTek’s Arm-based Kompanio Ultra 910 rivals the performance of Intel’s Core Ultra 5 125U at lower power. What does this mean for Chromebook Plus, thin clients, and Windows on Arm?