SambaNova has trimmed staff and pivoted to AI inference as a service, according to EE Times. Founded in 2017, the company emerged in 2020 with a coarse-grained reconfigurable architecture (CGRA) that promised raw throughput similar to that of the contemporaneous Nvidia A100 GPU but superior real-world training and inference performance. Since then, the company has developed several versions of its NPU (which it calls an RDU) and has shown superior throughput with less hardware than competitors. However, it has never disclosed MLPerf results, leading us to question its chips’ effectiveness.

Dancing with Services

Since launch, SambaNova has offered systems in addition to chips, setting it up to compete directly with Nvidia’s DGX and other systems. SambaNova has also provided cloud services, lowering the barriers for customers to try out its technology. By using these services, enterprises can avoid large capital expenditures for hardware from an unfamiliar vendor and can experiment with models such as DeepSeek, Llama, and Qwen without porting them to the hardware or obtaining them from Hugging Face. Standard APIs allow enterprises to integrate AI functions into their applications. SambaNova’s initiatives include projects to deliver cloud services in the Kingdom of Saudi Arabia, Singapore, and Japan.

In addition to lowering entry barriers, cloud services may be easier for the company to support than systems and far easier than chips. Developer tools can be less polished, and application engineers are unnecessary. Backing away from training also eliminates the need for a raft of software and associated resources. The EE Times article states that the company is laying off 15% of its workforce.

Beating GPUs with CGRA

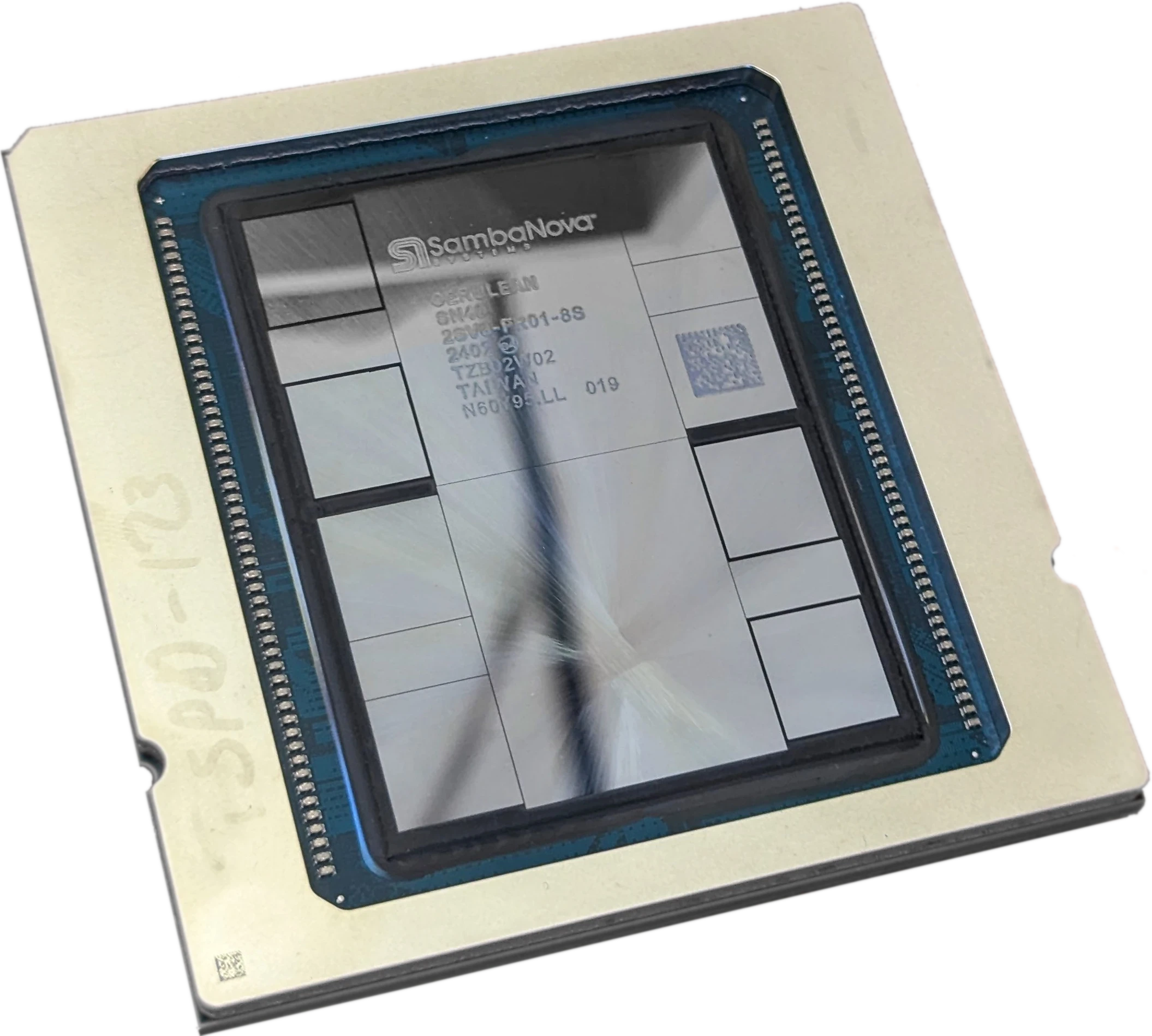

The SambaNova CGRA architecture is unusual. It arrays more than a thousand switch-connected memory and computing units. The company’s compiler maps neural networks to the array, setting the switches so that data flows through the chip from one unit to the next. The approach can eliminate round-tripping data between the processing units and a centralized memory, and it lends itself to pipelining operations and executing them in parallel.
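To make the round-tripping point concrete, here is a minimal toy sketch in Python. It is not SambaNova's compiler or hardware model; the function names, the three-stage network, and the idea of counting one unit of "traffic" per value read from or written to central memory are all assumptions chosen for illustration. It compares executing a network one operation at a time, with every intermediate result written back to memory, against streaming each value through all stages before storing it.

```python
from typing import Callable, List, Tuple

# A "stage" is a hypothetical per-element operation (e.g., scale, bias, ReLU).
Stage = Callable[[float], float]


def run_kernel_by_kernel(data: List[float], stages: List[Stage]) -> Tuple[List[float], int]:
    """Run one stage at a time; every stage reads its whole input from
    central memory and writes its whole output back (toy traffic count)."""
    traffic = 0
    buf = list(data)
    for stage in stages:
        traffic += len(buf)               # read the stage's input
        buf = [stage(x) for x in buf]
        traffic += len(buf)               # write the stage's output
    return buf, traffic


def run_dataflow(data: List[float], stages: List[Stage]) -> Tuple[List[float], int]:
    """Stream each value through all stages before storing it, so that
    intermediates pass from unit to unit and never touch central memory."""
    traffic = len(data)                   # read the input once
    out = []
    for x in data:
        for stage in stages:
            x = stage(x)                  # handed directly to the next stage
        out.append(x)
    traffic += len(out)                   # write the final output once
    return out, traffic


if __name__ == "__main__":
    stages = [lambda x: x * 2.0, lambda x: x + 1.0, lambda x: max(x, 0.0)]
    data = [-1.0, 0.5, 2.0]
    print(run_kernel_by_kernel(data, stages))
    print(run_dataflow(data, stages))
```

Under these assumptions, both functions compute the same result, but the kernel-by-kernel version's memory traffic grows with the number of stages while the streaming version's does not. A real chip also pipelines the stages so that different values occupy different units at the same time, which this sequential sketch does not model.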

Off-chip interfaces enable ganging together multiple NPUs. The most recent NPU is the SambaNova SN40L. In addition to the large integrated memory distributed among the memory units, the SN40L incorporates HBM. Moreover, unlike many NPUs and GPUs, it also interfaces with standard DRAM. Copious RAM enables the chip to run large models without scaling out simply to increase memory capacity, improving cost effectiveness and power efficiency.
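The capacity argument comes down to simple arithmetic, sketched below in Python. The function name, the model size, and the memory capacities are hypothetical values picked for illustration, not SN40L specifications, and the estimate counts only model weights, ignoring activations, KV cache, and other overhead.

```python
import math


def devices_needed(params_billion: float, bytes_per_param: float, device_mem_gb: float) -> int:
    """Minimum number of devices required just to hold the model weights.

    Billions of parameters times bytes per parameter gives gigabytes
    (using 1 GB = 1e9 bytes). Weights only; a rough lower bound.
    """
    weights_gb = params_billion * bytes_per_param
    return math.ceil(weights_gb / device_mem_gb)


if __name__ == "__main__":
    # Hypothetical 70-billion-parameter model stored at 2 bytes per parameter (140 GB).
    print(devices_needed(70, 2, 64))        # device with 64 GB of fast memory only
    print(devices_needed(70, 2, 64 + 512))  # same device plus 512 GB of attached DRAM
```

With these illustrative numbers, the memory-limited device must be replicated three times merely to store the weights, whereas the device with added DRAM holds them alone. Whether the extra DRAM's lower bandwidth is acceptable depends on the workload, which this sketch does not address.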

Bottom Line

Like other NPU startups, SambaNova eschewed a GPU architecture. As much as GPUs have evolved, inefficiencies linger. In theory, a GPU can’t match the alternatives’ performance, power, and cost on AI workloads. In practice, however, GPUs have been more than adequate, and their momentum has not slowed. Alternative architectures have succeeded only in hyperscaler-proprietary deployments such as the Google TPU and Amazon Trainium. Focusing on inference services, SambaNova seeks a place among them rather than positioning itself as a direct threat to Nvidia’s seemingly unstoppable hegemony.