Last month, SiFive delivered a second generation of RISC-V cores integrating vector and matrix units. The revised Intelligence family supports recent RISC-V standards, adds an I/O interface, and upgrades the vector units, enabling the cores to better address AI and encryption workloads as either an accelerator or a standalone CPU. The company also extended the family with the low-cost X100, whose scalar core is available in a 32-bit configuration as well as the 64-bit configuration that other Intelligence models use.

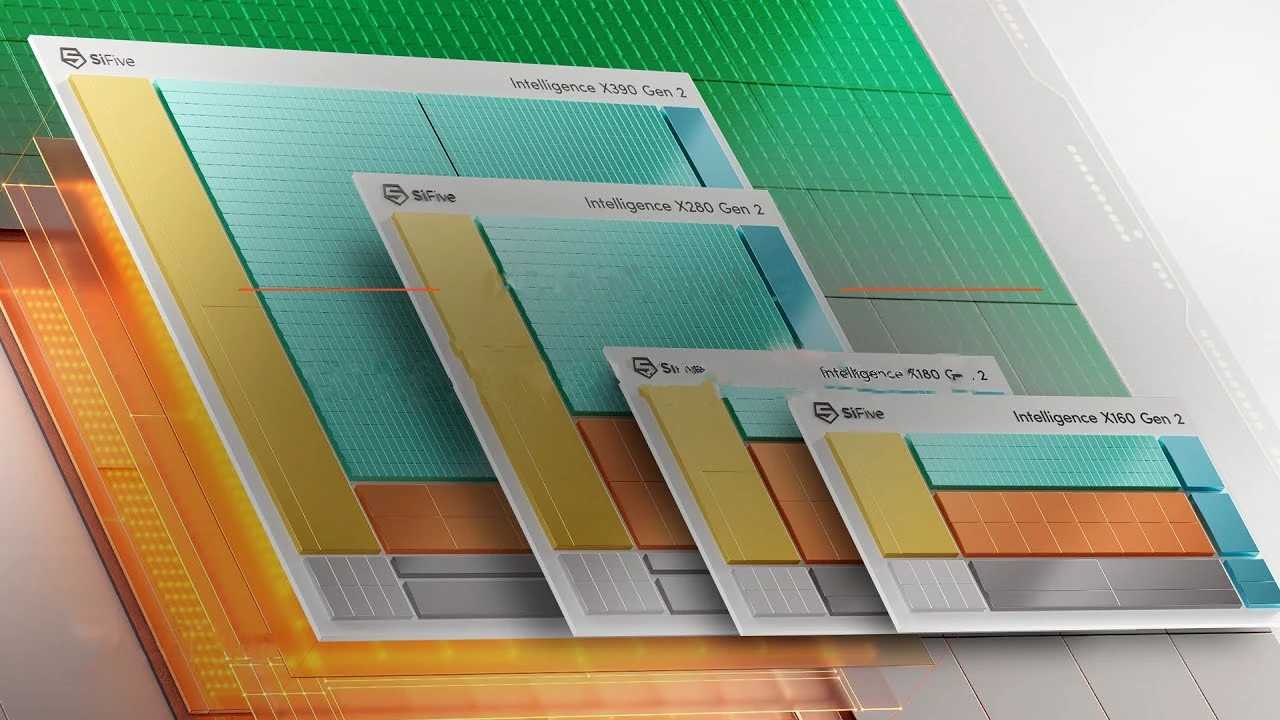

SiFive offers CPU families targeting different roles, such as Essential for control and Performance for application processing. Designated by an X in models’ names, Intelligence targets math processing, such as AI applications. All Intelligence family members integrate vector units, and the XM models add the company’s matrix engine. Vector width distinguishes the 100, 200, and 300 series CPUs, as Table 1 shows.

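Table 1. Vector-register width (VLEN) and data-path width (DLEN) by Intelligence series.

| Series | VLEN | DLEN |
|---|---|---|
| X100 | 128 bits | 64 bits |
| X200 | 512 bits | 256 bits |
| X300 | 1,024 bits | 512 bits |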

The RISC-V architecture accommodates vector units sized to meet differing performance, power, and area goals, and it defines parameters to express a unit’s configuration. Among these are VLEN and DLEN, which specify the vector-register width and the data-path width, respectively. Because the Intelligence family sets VLEN to twice DLEN, an operation on a full vector register occupies the data path for two cycles: the data path processes the vector’s upper half in one cycle and its lower half in the other.
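The VLEN/DLEN relationship reduces to simple arithmetic. The Python sketch below uses the widths from Table 1 to compute how many data-path passes one full-register operation needs, and how many elements a register holds at a given element width (SEW). It assumes one pass per cycle and a register-group multiplier (LMUL) of 1. The `SERIES` table and the helper names are illustrative and are not SiFive definitions.

```python
# VLEN (register width) and DLEN (data-path width) in bits, per Table 1.
SERIES = {
    "X100": (128, 64),
    "X200": (512, 256),
    "X300": (1024, 512),
}

def passes_per_op(vlen: int, dlen: int) -> int:
    """Data-path passes for one full-register vector operation (LMUL = 1).
    Assuming one pass per cycle, this is also the cycle count."""
    return vlen // dlen

def elements_per_register(vlen: int, sew: int) -> int:
    """Number of `sew`-bit elements that fit in one vector register."""
    return vlen // sew

for name, (vlen, dlen) in SERIES.items():
    print(f"{name}: {passes_per_op(vlen, dlen)} passes/op, "
          f"{elements_per_register(vlen, 8)} INT8 or "
          f"{elements_per_register(vlen, 16)} BF16 elements per register")
```

Every series needs two passes per operation, consistent with the two-cycle behavior described above. Only the number of elements per register changes from series to series.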

The smaller X100 suits tasks such as detecting acoustic events, while the X200 can perform object recognition. To quadruple the peak throughput of the X200, the X300 series has two vector units, each twice as wide as the X200’s. Supporting multicore configurations and a matrix engine, the X300 series can address real-time scene detection, such as in AR glasses.
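The factor-of-four claim follows from multiplying data-path width by unit count. The minimal Python sketch below rests on two assumptions. First, each vector unit completes one DLEN-wide slice per cycle, which is an idealized peak. Second, the X200 has a single vector unit; the text implies this but does not state it. `peak_elements_per_cycle` is a hypothetical helper.

```python
def peak_elements_per_cycle(dlen_bits: int, units: int, sew_bits: int) -> int:
    """Idealized peak: each unit retires one DLEN-wide slice per cycle."""
    return units * dlen_bits // sew_bits

# DLEN values from Table 1; unit counts as described in the text (assumed for X200).
x200 = peak_elements_per_cycle(dlen_bits=256, units=1, sew_bits=8)
x300 = peak_elements_per_cycle(dlen_bits=512, units=2, sew_bits=8)
print(x200, x300, x300 // x200)
```

Doubling the width and doubling the unit count each contribute a factor of two. Their product is the fourfold gain.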

New Intelligence Features

To improve vector performance, the second-generation family decouples the vector unit from the rest of the core. The vector unit has command and data queues to buffer operations and data, and SiFive lets licensees choose the data-queue depth to suit their memory latencies. Separate load and store data paths increase aggregate throughput between the memory hierarchy and the vector unit.
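Little’s law explains why queue depth should track memory latency. Sustaining one load per cycle requires as many outstanding requests as there are cycles of latency. The Python model below is a deliberately simplified assumption, not SiFive’s microarchitecture. It treats each queue entry as held from issue until its data returns a fixed `latency` cycles later, and it issues at most one load per cycle. The function name is hypothetical.

```python
from collections import deque

def sustained_loads_per_cycle(queue_depth: int, latency: int,
                              cycles: int = 10_000) -> float:
    """Simulate a decoupled load path with a bounded queue of in-flight loads."""
    in_flight = deque()  # completion cycles of outstanding loads, oldest first
    completed = 0
    for cycle in range(cycles):
        # Free the entries whose data has returned by this cycle.
        while in_flight and in_flight[0] <= cycle:
            in_flight.popleft()
            completed += 1
        # Issue at most one new load per cycle if a queue entry is free.
        if len(in_flight) < queue_depth:
            in_flight.append(cycle + latency)
    return completed / cycles

for depth in (2, 4, 8, 16):
    print(depth, round(sustained_loads_per_cycle(depth, latency=8), 3))
```

With an 8-cycle latency, a 4-entry queue sustains about half a load per cycle. An 8-entry queue sustains nearly one, and deeper queues add nothing. This is why a licensee with slower memory would choose a deeper data queue.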

To streamline the memory hierarchy, the second-generation family removes a cache level. It eliminates the cores’ private L2 caches but keeps the shared last-level cache, which was previously the L3 and is now the L2. Area therefore decreases, and SiFive reports higher cache utilization.

As we’ve noted in our coverage of other products, such as the Nvidia Rubin CPX, softmax can be an AI-processing bottleneck because it computes an exponential for every element of its input. To alleviate this, SiFive offers an optional exponentiation unit and an associated custom RISC-V vector instruction, which can speed up some AI kernels by 80%. Other upgrades include native support for BF16 (a popular 16-bit floating-point format for AI processing), encryption instructions, and conversion of 4-bit and 8-bit floating-point (FP4 and FP8) data. An Intelligence-based design can therefore store data in FP4 or FP8 to reduce memory usage and convert it to a wider format for computation, even though the narrow formats bring no computational speedup.
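Softmax needs one exponential per input element, which is why a dedicated exponentiation unit helps. The plain-Python sketch below shows the standard numerically stable formulation. It is a generic illustration and does not model SiFive’s custom instruction or kernels.

```python
import math

def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax over a single vector of logits."""
    # Subtracting the maximum keeps exp() from overflowing without changing the result.
    m = max(logits)
    # One exponentiation per element: the step a hardware exp unit accelerates.
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])  # ≈ [0.659, 0.242, 0.099]
```

The exponentials dominate the cost because each one requires a transcendental-function evaluation, while the remaining work is a max, a sum, and a division per element.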

Except for the 32-bit X160 configuration, the Intelligence family shares a 64-bit integer-core microarchitecture. It’s an eight-stage, dual-issue, in-order design compliant with the RVA23 RISC-V profile, which has become the de facto standard. An MMU is optional, consistent with the cores’ dual role. A standalone CPU running a rich operating system such as Linux needs one, whereas an accelerator can omit it.

A core can also control accelerators attached to it. For this purpose, the second-gen Intelligence family has two coprocessor interfaces. The SiFive scalar coprocessor interface (SSCI) gives custom logic direct access to scalar registers under the control of licensee-defined instructions. The new vector coprocessor interface (VCIX) serves a similar role for vector registers. A separate interface, the core local port (CLP), allows the CPU to access the custom accelerator’s local memory.
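Licensee-defined instructions typically occupy opcode space that the RISC-V ISA reserves for custom extensions, such as the custom-0 major opcode (0b0001011). The Python sketch below packs a standard R-type instruction word. Using custom-0 for an SSCI operation is an assumption for illustration, because the article doesn’t say which encodings SiFive’s interfaces use. `encode_r_type` is a hypothetical helper.

```python
CUSTOM_0 = 0b0001011  # major opcode RISC-V reserves for custom extensions
OP = 0b0110011        # standard register-register integer opcode (e.g., ADD)

def encode_r_type(rd: int, rs1: int, rs2: int,
                  funct3: int = 0, funct7: int = 0,
                  opcode: int = CUSTOM_0) -> int:
    """Pack fields into a 32-bit R-type word:
    funct7[31:25] rs2[24:20] rs1[19:15] funct3[14:12] rd[11:7] opcode[6:0]."""
    for reg in (rd, rs1, rs2):
        if not 0 <= reg < 32:
            raise ValueError("register index must be 0-31")
    return ((funct7 & 0x7F) << 25 | (rs2 & 0x1F) << 20 | (rs1 & 0x1F) << 15
            | (funct3 & 0x7) << 12 | (rd & 0x1F) << 7 | (opcode & 0x7F))

# Sanity check against a standard instruction: add x1, x2, x3 is 0x003100B3.
assert encode_r_type(rd=1, rs1=2, rs2=3, opcode=OP) == 0x003100B3
# A hypothetical custom-0 instruction that reads x10 and x11 and writes x12.
print(hex(encode_r_type(rd=12, rs1=10, rs2=11, funct3=0b001)))
```

The scalar core decodes such a word as it would any R-type instruction. An interface like SSCI would then route the register operands to the custom logic instead of the core’s own ALU.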

Competition

Andes

Andes is SiFive’s main competitor for vector RISC-V cores, licensing a design with a similar scalar core supplemented by vector and matrix extensions. SiFive’s Intelligence family and the Andes AX46MPV implement similar features. Differences include profile compliance, matrix and vector units, and memory hierarchies.

Andes advertises compliance with the older RVA22 profile, which is close to the preferred RVA23. A notable difference is that RVA23 makes the vector extension mandatory, which matters little for a core that already implements vectors. RISC-V has yet to standardize matrix extensions, so both vendors provide proprietary implementations. Andes offers an 8-bit integer (INT8) matrix-multiplication block built into the core, whereas SiFive has a larger matrix unit, shareable by multiple CPUs, that supports BF16 in addition to INT8. Licensees seeking to offload light AI workloads will find the Andes solution sufficient; SiFive’s is better for those building a full-fledged accelerator (NPU) or requiring more throughput.
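An INT8 matrix-multiplication block multiplies 8-bit operands and accumulates the products in a wider integer to avoid overflow. The pure-Python sketch below shows that arithmetic. The 32-bit accumulator bound is an assumption for illustration and is not a documented detail of either vendor’s unit. `matmul_int8` is a hypothetical helper.

```python
INT8_MIN, INT8_MAX = -128, 127
INT32_MIN, INT32_MAX = -(2**31), 2**31 - 1

def matmul_int8(a: list[list[int]], b: list[list[int]]) -> list[list[int]]:
    """Multiply INT8 matrices a (m x k) and b (k x n) with wide accumulation."""
    k = len(b)
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    for mat in (a, b):
        for row in mat:
            if any(not INT8_MIN <= v <= INT8_MAX for v in row):
                raise ValueError("operands must be INT8")
    n = len(b[0])
    out = [[0] * n for _ in a]
    for i, row in enumerate(a):
        for j in range(n):
            # Each INT8 product is at most 2**14 in magnitude, so a 32-bit
            # accumulator can sum more than 100,000 of them without overflow.
            acc = sum(row[p] * b[p][j] for p in range(k))
            if not INT32_MIN <= acc <= INT32_MAX:
                raise OverflowError("accumulator exceeds 32 bits")
            out[i][j] = acc
    return out

print(matmul_int8([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

Keeping operands at 8 bits while widening only the accumulators is what lets INT8 engines pack many multipliers into a small area.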

For vector processing, rather than offering several named models that differ in vector-unit size, Andes supplies a single model with a configurable vector unit and 32-bit or 64-bit scalar-core options. Whereas the SiFive X390 has two vector units with a 1,024-bit VLEN, the AX46MPV has a single unit configurable up to 2,048 bits, yielding the same raw throughput. In the memory hierarchy, Andes offers a tightly coupled vector memory, whereas the Intelligence family can simultaneously load vectors from and store vectors to the L2 cache shared among cores. Andes also provides an optional private L2 cache and a shared last-level cache, as earlier Intelligence models did.

Unless matrix-math acceleration is a primary concern, licensees will likely choose between the two vendors based on factors such as trust in the vendor as well as power, performance, and area (PPA).

Other Alternatives

Potential licensees may also consider Tensilica cores from Cadence and vector DSPs from Ceva. Neither is RISC-V compatible, which eliminates both if RISC-V compatibility is a requirement. Cadence offers various standard configurations, but a comparable vector processor requires licensees to use Tensilica’s customization tools. A further limitation is that the Tensilica scalar core is only 32 bits. Ceva has various DSPs, optionally integrating additional functions for AI and wireless processing. Its offerings therefore suit designers seeking either a DSP programming model or, at the other extreme, a complete subsystem.

Bottom Line

SiFive is a RISC-V innovator with a broad CPU portfolio and novel extensions, such as those for matrix processing. The company is revisiting its past designs and revamping them to address customer requirements. The new Intelligence family is a broader product line than its predecessor, and it improves PPA and customization. Developers who chose a competitor over the original Intelligence CPUs will find the second generation improved. New developers will find the Intelligence family flexible and scalable, whether they’re designing an NPU or an SoC capable of AI or signal processing.