Year: 2025

-

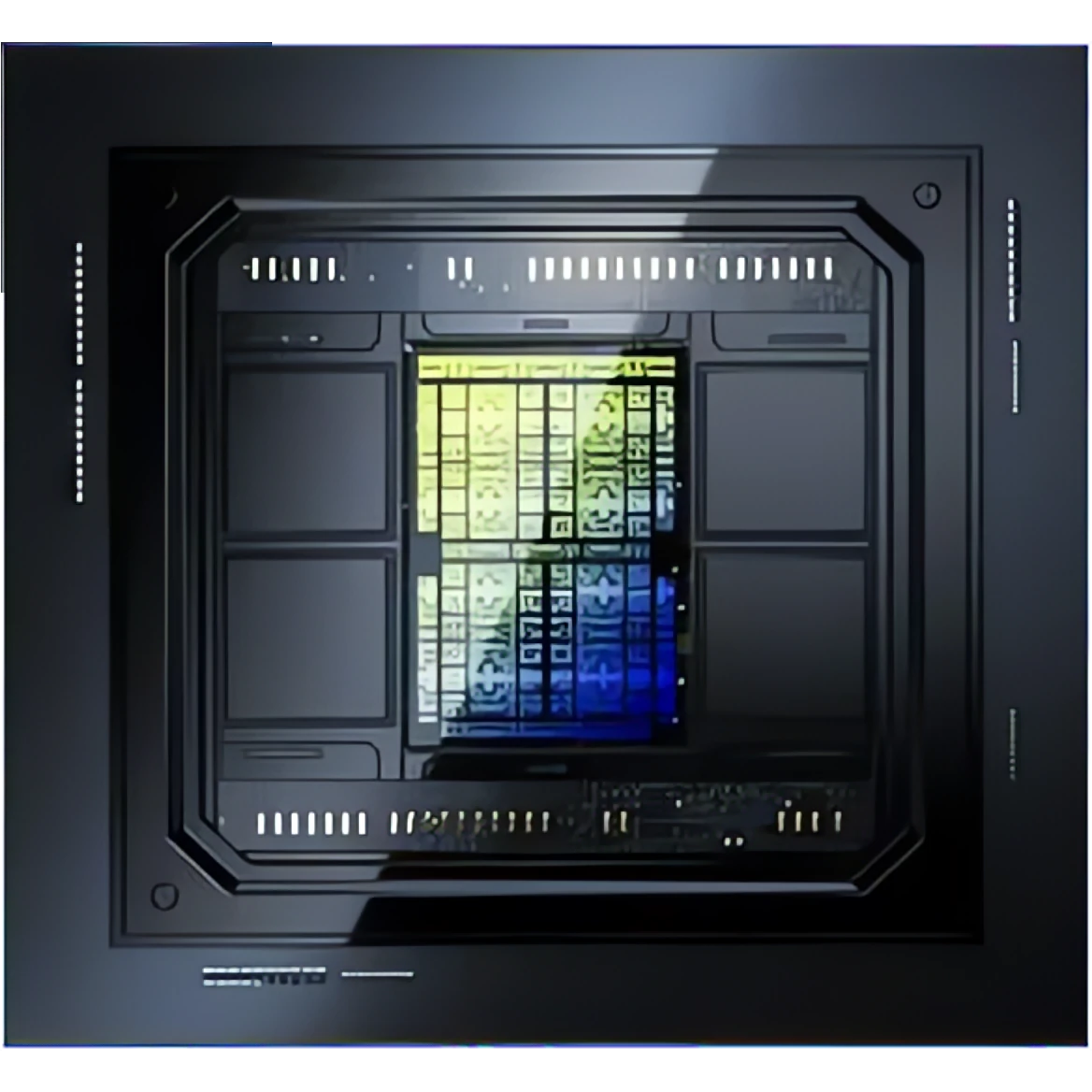

Intel Details 18A Panther Lake & Clearwater Forest Chips

Intel has revealed architectural updates for its 18A-based PC (Panther Lake) and server (Clearwater Forest) processors, the first chips on the new node. The 18A process features Ribbon FET (GAA) transistors and Power Via backside power delivery. Clearwater Forest uses 12 computing tiles with Darkmont E-cores; it and Panther Lake update their E-cores for better…

-

Tenstorrent Licenses Ascalon RISC-V CPU and Tensix Neo NPU IP

Tenstorrent now broadly licenses its CPU and NPU cores. The Ascalon X delivers IPC approaching Arm’s fastest CPUs, and the Tensix Neo is a scalable NPU block. The company also provides supporting design resources.

-

Byrne-Wheeler Report, Episode 2

I’ve suspended my solo YouTube channel to focus on the Byrne-Wheeler report. In this, our second pilot episode, we discuss the Nvidia-Intel and the rumored Nvidia-Enfabrica deals, Upscale emerging from stealth, and the MediaTek and Qualcomm smartphone chips.

-

AMD Inks OpenAI Deal

OpenAI has agreed to buy AMD AI accelerators; in exchange, AMD grants it warrants for about 10% of the company. Somehow, this will be accretive to AMD. Spanning five years, the deal calls for OpenAI to purchase 6 gigawatts of AMD-based hardware. The first 1 GW tranche will deploy in the second half of next…

-

Never Mind the Agentic Bollocks, Here’s the Snapdragon

Revised CPUs, a bigger NPU, and improved multimedia functions mark Qualcomm’s Snapdragon 8 Elite Gen 5 smartphone processor. The same CPUs propel the Snapdragon X2 PC processor to 5 GHz.

-

Log-Computing Pioneer Recogni Rebrands as Tensordyne

The newly renamed Tensordyne is shifting its focus from automotive to generative-AI systems differentiated by its power-efficient logarithmic number system.