AMD has launched the Instinct MI350 AI accelerator (GPU), but next year’s MI400, teased at today’s pep rally, is bigger news. Available in two versions, the MI350 delivers similar raw performance to Nvidia Blackwell, which is already in deployment. The next AI chip also promises similar floating-point throughput to its rival’s contemporaneous offering but 1.5× the memory capacity and bandwidth. Acknowledging that it’s playing in Nvidia’s world, AMD described itself as a “critical high-performance alternative” during the MI350 launch event.

AMD Instinct MI350 Details

- The MI350 family comprises the MI350X and MI355X. AMD acknowledges they’re the same silicon but qualifies the latter to run at higher power. (Unless specified, performance discussed here is for the faster MI355X.)

- Performance leaps 35× over the AMD MI300X, but this compares the new chip employing FP4 data with the old one employing FP8. Moreover, the gain is only on the Llama 3.1 405B model configured for ultra-low latency inference.

- FP4 throughput peaks at 10 PFLOPS (no sparsity) compared with 2.6 FP8 PFLOPS for the MI300X, accounting for part of the 35× speedup. By comparison, the Blackwell B200 also delivers 10 FP4 PFLOPS.

- A 50% larger HBM capacity enables a single GPU to contain the whole model, which we believe accounts for the remaining speedup. (Note that the MI325X adds memory to the MI300X, which could be why AMD doesn’t compare the MI355X to it.)

- In other Llama 3.1 405B cases, AMD claims a 2.8× to 4.2× improvement over the MI300X, driven, we expect, by the relative FP4 over FP8 speedup. AMD also puts its new chip ahead of the B200 on DeepSeek R1 and Llama 3.1 405B inferencing, a gain consistent with its greater power (1.4 kW).

- AMD stated it delivers 40% more tokens per dollar than Nvidia, obliquely conceding it discounts Instinct.

- Maximum FP16 and FP8 performance matches the B200 at 2.5 PFLOPS and 5 PFLOPS, respectively.

- AMD slashed FP64 peak throughput as Nvidia did with the new B300, indicating that a single product can no longer serve the AI and HPC markets.

- The MI350 family is already shipping, and the first systems and cloud instances should be available next quarter.
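
The capacity and tokens-per-dollar points above can be sanity-checked with back-of-envelope arithmetic. The sketch below is ours, not AMD's. It assumes HBM capacities of 192 GB for the MI300X and 288 GB for the MI355X (AMD's published specs, not stated above but consistent with the 50% increase), counts model weights only (no KV cache or activations), and treats "tokens per dollar" as throughput divided by price. The function names are hypothetical.

```python
import math

# Assumed HBM capacities in GB (AMD's published specs; not stated in this article).
HBM_GB = {"MI300X": 192, "MI355X": 288}

# Storage cost per parameter for each data format.
BITS_PER_PARAM = {"FP8": 8, "FP4": 4}


def weight_footprint_gb(params_billions: float, fmt: str) -> float:
    """Weights-only footprint in GB (1 GB = 1e9 bytes).

    Ignores KV cache, activations, and scaling-factor overhead,
    so real deployments need more memory than this.
    """
    return params_billions * BITS_PER_PARAM[fmt] / 8


def min_gpus_for_weights(params_billions: float, fmt: str, gpu: str) -> int:
    """Smallest GPU count whose combined HBM holds the weights."""
    return math.ceil(weight_footprint_gb(params_billions, fmt) / HBM_GB[gpu])


def implied_price_ratio(tokens_per_dollar_gain: float,
                        relative_throughput: float = 1.0) -> float:
    """AMD-to-Nvidia price ratio implied by a tokens-per-dollar advantage.

    tokens/$ = throughput / price, so
    price_ratio = relative_throughput / (1 + gain).
    relative_throughput = 1.0 is an assumption (equal tokens/s per GPU).
    """
    return relative_throughput / (1.0 + tokens_per_dollar_gain)


if __name__ == "__main__":
    print(weight_footprint_gb(405, "FP8"), min_gpus_for_weights(405, "FP8", "MI300X"))
    print(weight_footprint_gb(405, "FP4"), min_gpus_for_weights(405, "FP4", "MI355X"))
    print(round(implied_price_ratio(0.40), 3))
```

Under these assumptions, Llama 3.1 405B weights take 405 GB in FP8, which needs at least three MI300X GPUs, but only 202.5 GB in FP4, which fits in a single MI355X. Likewise, a 40% tokens-per-dollar advantage at equal throughput implies a price ratio of about 0.71, or a discount of roughly 29%. If AMD's claim is based on total cost of ownership rather than purchase price, the implied discount would differ.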

Other MI350 Launch Tidbits

- AMD didn’t discuss training until well into the Instinct MI350 launch event. The company cited MLPerf results but still only for a Llama 70B model.

- Software is one factor hindering AMD’s training performance, and AMD today launched Version 7 of its ROCm stack, reporting 3.5× faster training than with ROCm 6.

- Interconnect is another factor. To scale up (i.e., for intrarack connections), AMD tunnels UALink over Ethernet. We’re concerned the added protocol overhead saps performance compared with Nvidia’s NVLink (or native UALink). Moreover, AMD can’t point to large-scale clusters like Nvidia can.

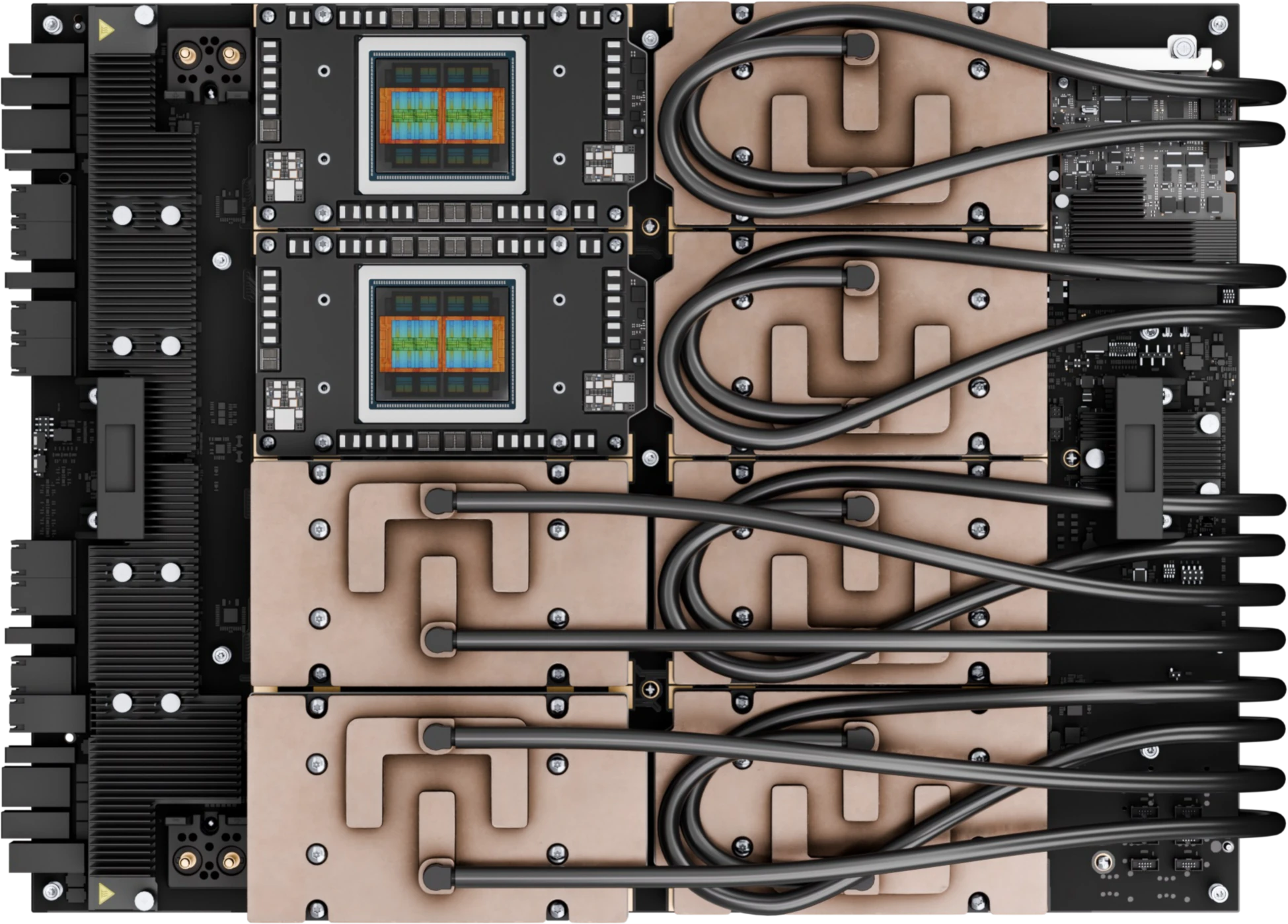

- Nonetheless, AMD touted a 128-GPU rack employing liquid cooling.

Ringing Endorsements

- AMD’s 50-company logo wall is an impressive proof point, and the company reports ~~4 out of 5 dentists~~ 7 of the 10 largest AI businesses use Instinct accelerators. That said, the scale of these companies’ deployments is questionable given Nvidia’s commanding market share.

- Nonetheless, launch-event speakers endorsing AMD include representatives from one of AMD’s biggest AI-accelerator customers (Meta) and OpenAI.

- Sam Altman appeared on stage with AMD CEO Lisa Su at the end of the presentation, as iconic a guest star as an AI show can muster.

- Altman stated OpenAI is already running some workloads on the MI300X.

- Also, OpenAI is “extremely excited for the MI450,” and the specs initially sounded “totally crazy.” (The MI450 is an initial MI400-series SKU.)

- Astera’s CEO appeared on stage to endorse UALink and promise related I/O components (switches, signal conditioners, and controllers) for MI400-based systems and any others using UALink. Of those components, switches are the most intriguing to us as they could obviate tunneling the protocol over Ethernet.

AMD Instinct, Epyc, and Pensando Roadmaps

- Already in AMD’s lab, the MI400 doubles the MI350’s FP8 and FP4 peak throughput, doubles scale-up bandwidth, more than doubles memory bandwidth, and increases memory capacity 1.5×.

- AMD claims the MI400 will be 10× faster than the MI355X, but, as with the 35× gain discussed above, this speedup won’t be generalizable.

- In 2026, AMD expects to deliver the MI400 in a rack-scale system, much as Nvidia has incorporated Blackwell into the NVL72 and as Intel is promising to do with its allegedly forthcoming Jaguar Shores GPU.

- The MI400 rack, called Helios, matches the forthcoming Nvidia Vera Rubin (Oberon) system in most regards but promises 1.5× the memory capacity and bandwidth.

- AMD intends to follow this design in 2027 with an MI500-based rack that also employs the Epyc Verano server processor, the successor to the Zen 6 Epyc Venice that Helios employs. If Verano is a Zen 7 chip, AMD is doubling its CPU-design cadence.

- Venice will be one of the first chips that TSMC fabs in its 2 nm process and will sport up to 256 CPU cores.

- Both Helios and the next-gen MI500 system will also incorporate a new Pensando DPU called Vulcano, the follow-on to Salina.

Bottom Line

Inference deployments are growing fast, accounting for a greater share of AI-accelerator sales. It’s good news for AMD and competing NPU vendors because all have struggled to capture share from the mighty Nvidia, particularly for training, where Team Green’s software is far ahead. Nonetheless, AMD is investing across the board, including in technologies to improve Instinct’s applicability to training.

The company is playing catch-up. However, on a per-GPU basis, the MI400 may be on par with Nvidia’s offering (Rubin) or even ahead, owing to its memory subsystem. A system solution has become important, but AMD must also show scaling out to thousands of racks, a feat enabled by improved interconnect and software. As for 2025, the MI350’s generational gains are impressive but only keep AMD competitive.