Nvidia has disclosed Rubin CPX, a data-center AI chip that the company’s public roadmaps haven’t previously included. Due by the end of 2026, the CPX arrives after Rubin proper and before Rubin Ultra. Instead of replacing these chips, Rubin CPX complements them by offloading some processing.

Rubin CPX is a monolithic chip that employs GDDR7 external DRAM, unlike Blackwell, Rubin, and Rubin Ultra, which integrate two computing dice and eight HBM stacks. The CPX, therefore, doesn’t use TSMC’s Cowos packaging technology. The CPX has four video encoders and decoders to accelerate video analysis and generation. To accelerate LLM’s attention function, the CPX integrates new hardware. Nvidia says the CPX triples the exponentiation throughput of the GB300, the newest Grace Blackwell module.

Disaggregating Prefill and Decode Stages

AI-services companies have been disaggregating their large language models (LLMs), dividing the inference pipeline among multiple GPUs/NPUs. One such division is between the prefill stage and the decode stage. Prefilling is computationally intense and governs the time to first token.

Decoding generates tokens and determines the token-per-second rate. It’s less computationally intensive than prefilling but requires relatively more memory capacity and bandwidth. Decoding entails the attention function, which includes softmax operations.

Softmax involves exponentiation steps. These are often performed at higher precision than the matrix multiplication used in other LLM computations, such as FP32 instead of BF16. Vector units, not matrix engines, handle softmax, which limits throughput. Moreover, exponentiation can be a slow operation without specialized circuits. We infer that the CPX integrates hardware specifically for exponentiation to improve softmax throughput and, therefore, decoding.

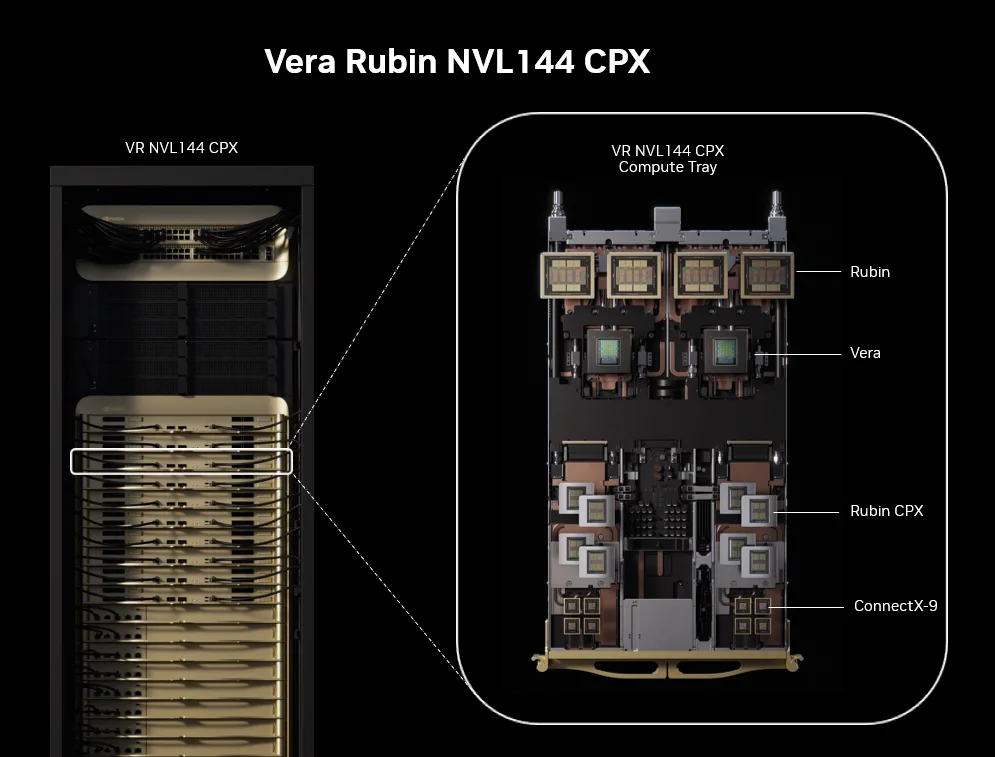

Nvidia proposes racks that disaggregate the LLM-inference pipeline, dividing execution between Rubin and Rubin CPX. Rubin executes the prefill and prior stages, and Rubin CPX performs the decoding and subsequent stages. Non-CPX and CPX racks can be adjacent to each other. A CPX tray has two Arm-compatible Vera processors, eight ConnectX-9 network controllers (DPUs), and eight CPX chips. The CPX chip has PCIe interfaces but not the speedy NVLink, indicating the KV data from the prefill stage must traverse PCIe by way of the Vera hosts to get into the CPX’s memory.

Analysis

Nvidia has offered PCI-based accelerators such as the L40S. They use the same silicon as Nvidia’s PC/workstation GPUs but target accelerated computing. Nvidia may be taking the same approach with the Rubin CPX, adapting a PC/workstation design enhanced to raise exponentiation throughput. Clues include the PCIe interface, video coders, GDDR7 memory, and circuits seen in the company’s rendering of the chip.

We accept the argument that LLM inference can be disaggregated and that different AI processors are better suited to each stage. However, other factors could be afoot. Nvidia is likely to be constrained by the supply of Cowos, HBM, and possibly leading-edge silicon. The smaller, monolithic, and conventionally-packaged Rubin CPX can address some of the demand for computing resources that Rubin would otherwise satisfy.

Bottom Line

Rubin CPX introduces a different computing element into an otherwise homogeneous data center, but we see that as only an inconvenience. Nvidia’s motivations for developing it could be self serving as much as they address customer needs. If it proves popular, we expect Nvidia to make enhancements. It could add NVlink to the chip or modify the tray design to use the company’s newly acquired Enfabrica technology to directly fill memory from the network. Rubin CPX should be good for both Nvidia and its customers, enabling the company to deliver more computing capacity and thereby generate more revenue.

Image source: Nvidia.