In light of rising DRAM prices, we’re updating this article from June. As of late 2025, server DRAM prices are surging. Memory makers are prioritizing higher-margin HBM for AI accelerators over DDR5. Consequently, DDR5 DIMMs are perhaps the largest and fastest-rising component cost in a server. At the same time, OEMs are passing these cost increases directly through to customers, raising overall server prices. Industry analysts and suppliers now broadly expect memory pricing pressure to persist. Micron states that shortages won’t improve before 2028, making DRAM over-provisioning increasingly unsustainable.

Have you noticed how expensive servers are? It’s easy to point a finger at processor prices. More than one-third of Intel’s Granite Rapids (Xeon 6) models list for over $5,000, and the company prices many of those above $10,000. Memory, however, is a bigger culprit; a year ago, DRAM could cost twice as much as a processor. Since then, memory prices have climbed 60%, according to Reuters. Worse, even a heavily loaded server might leave much of that memory unutilized. For example, University of Michigan and Meta researchers found that within a two-minute interval, only 20% of memory is hot for a data warehouse. Fortunately, memory costs can be reduced.

Optimize DRAM Utilization

So how do we improve DRAM utilization? One approach is to kick out cold pages loitering in DRAM. The brute-force approach of reducing memory capacity would increase utilization but kill performance. When usage spikes, memory must be available; otherwise, execution stops while the OS swaps pages. Anybody who has used a PC with insufficient memory will recall the machine’s insufferable sluggishness at critical times. An IT leader, therefore, sizes a server’s DRAM based not on average utilization but on a balance of cost and performance to hit a sweet spot.

Instead of focusing narrowly on a single system’s DRAM size and upsizing it until the cost becomes too painful, are there alternative approaches that adapt capacity and thereby improve utilization? In theory, memory pooling could help. Two systems with half of their memory idle could share DRAM, increasing its utilization through statistical multiplexing. The IT leader’s tasks, then, are to assess applications’ erlangs (the telecom unit of offered load), size memory accordingly, and hope contention is rare enough to be tolerable. Even when it works well, pooled memory takes longer to access than local DRAM, hurting performance.
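The statistical-multiplexing argument can be illustrated with a toy simulation. This is only a sketch under stated assumptions: the demand model (a 64 GB baseline with independent spikes to 256 GB 5% of the time), the 99th-percentile sizing rule, and every function name are hypothetical choices, not measurements from a real workload.

```python
import random


def percentile(samples, q):
    """Return the value at quantile q (0..1) of the samples."""
    ordered = sorted(samples)
    index = min(len(ordered) - 1, int(q * len(ordered)))
    return ordered[index]


def simulate(num_servers=2, steps=10_000, spike_prob=0.05,
             base_gb=64.0, spike_gb=256.0, q=0.99, seed=0):
    """Compare dedicated and pooled DRAM sizing under an assumed demand model."""
    rng = random.Random(seed)
    # Assumption: each server idles at base_gb and independently spikes to spike_gb.
    demand = [
        [spike_gb if rng.random() < spike_prob else base_gb for _ in range(steps)]
        for _ in range(num_servers)
    ]
    # Dedicated: size every server for its own 99th-percentile demand.
    dedicated = sum(percentile(server, q) for server in demand)
    # Pooled: size one shared pool for the 99th percentile of combined demand.
    pooled = percentile([sum(step) for step in zip(*demand)], q)
    return dedicated, pooled


dedicated_gb, pooled_gb = simulate()
print(f"dedicated: {dedicated_gb:.0f} GB, pooled: {pooled_gb:.0f} GB")
```

With these assumed parameters, the pool can be smaller than the sum of the dedicated allocations because simultaneous spikes are rare. The sketch ignores the extra access latency of pooled memory, which is the drawback noted above.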

Reduce Reliance on Expensive DRAM

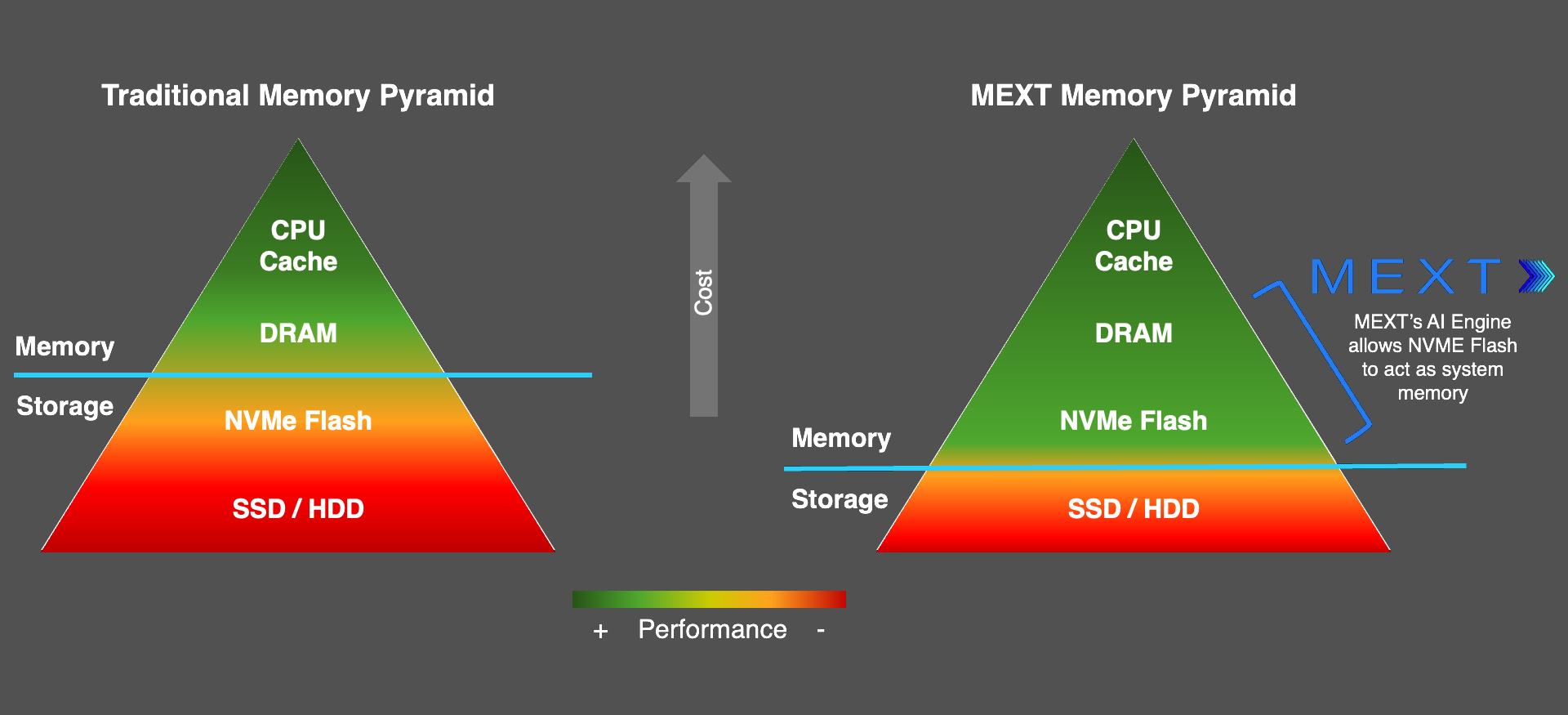

A better solution is to adapt memory tiering so that it reduces servers’ DRAM and, thereby, costs. Solid-state storage, typically connected via an NVMe interface, is much cheaper per bit than DRAM. At the start of 2026, one dollar buys 50× as much flash as DRAM. However, while employing flash instead of DRAM reduces cost, it introduces performance-sapping latency if no further changes are made. Simply paging between DRAM and nonvolatile mass storage is the unacceptable status quo. IT teams need a new memory tier: one that delivers DRAM’s low latency and flash’s cost efficiency.
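To make the cost argument concrete, here is a back-of-the-envelope calculation. Only the 50× price ratio comes from the text above. The $10/GB DRAM price, the 1 TB footprint, the 50% DRAM share, and the function name are hypothetical values chosen for illustration.

```python
def memory_cost(total_gb, dram_fraction, dram_price_per_gb, flash_ratio=50.0):
    """Cost of holding total_gb of memory pages, split between DRAM and flash.

    flash_ratio is how many times more flash than DRAM one dollar buys
    (50x per the article); every other input is an assumption.
    """
    dram_gb = total_gb * dram_fraction
    flash_gb = total_gb - dram_gb
    flash_price_per_gb = dram_price_per_gb / flash_ratio
    return dram_gb * dram_price_per_gb + flash_gb * flash_price_per_gb


# Hypothetical server: 1 TB memory footprint, DRAM at an assumed $10/GB.
all_dram = memory_cost(1024, 1.0, 10.0)
tiered = memory_cost(1024, 0.5, 10.0)  # assumed: half the pages stay in DRAM
print(f"all DRAM: ${all_dram:,.2f}, tiered: ${tiered:,.2f}")
print(f"savings: {100 * (1 - tiered / all_dram):.0f}%")
```

Because flash is so much cheaper per gigabyte, the savings track the share of DRAM removed almost one for one. The calculation says nothing about performance, which depends on how well the tiering hides flash latency.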

Avoid Changing Hardware or Software

In pursuit of reducing costs, it is important to avoid changes to hardware or software. Changes increase costs and slow adoption, as Intel’s now-canceled Optane DIMMs demonstrated. On the hardware side, processors and motherboards had to accommodate the technology, and customers had to purchase special memory modules. For Intel, the biggest processor vendor and Optane supplier, this was no problem. For everyone else, it was a hassle and expense.

On the software side, an IT manager had to deal with the complexity of choosing one of multiple Optane modes. One was transparent to applications but involved caching Optane accesses in DRAM to maintain processing speed, necessitating OS support and causing inconsistent performance. The other modes entailed application modifications, which introduced other headaches.

IT leaders want to reduce their costs but are risk averse. They will pay more if they can use proven components. Therefore, the ideal approach employs standard hardware—processors, memory modules, and flash drives. It also doesn’t require kernel or application modification, necessitating at most a driver and unobtrusive user-space software.

MEXT Uses AI to Reduce Server Costs

Startup MEXT offers AI-powered memory management that improves memory utilization and reduces the amount of expensive DRAM a server needs, all on standard hardware. Its software enables flash to function as main memory, virtually transforming it from a pure mass-storage technology to system memory. It does so without requiring kernel or application modifications.

MEXT’s technology continually monitors a system, figuring out which memory pages are hot or cold. More importantly, it predicts which cold pages are about to heat up. Using this analysis, it transparently offloads the cold ones to a conventional NVMe-connected solid-state drive (SSD). And, unlike traditional paging schemes, its AI engine predictively promotes pages from the flash drive up to DRAM before the CPU requests them. In effect, MEXT, through software, establishes a new memory tier that is far cheaper than DRAM yet far lower in latency than flash-based mass storage.
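The difference between reactive paging and predictive promotion can be shown with a toy model. This sketch is not MEXT's algorithm, which the article does not describe in detail. It substitutes a simple stride predictor for the AI engine, uses least-recently-used demotion as the cold-page policy, and all class and function names are hypothetical.

```python
from collections import OrderedDict


class TieredMemory:
    """Toy model: a small DRAM tier in front of a larger flash tier."""

    def __init__(self, dram_pages, predict=False):
        self.dram = OrderedDict()  # resident pages, coldest first
        self.dram_pages = dram_pages
        self.predict = predict
        self.last_page = None
        self.demand_misses = 0     # accesses that had to wait on flash

    def _load(self, page):
        # Demote the coldest pages to flash until there is room, then promote.
        while len(self.dram) >= self.dram_pages:
            self.dram.popitem(last=False)
        self.dram[page] = True

    def access(self, page):
        if page in self.dram:
            self.dram.move_to_end(page)  # mark the page as hot
        else:
            self.demand_misses += 1      # reactive path: fetch on demand
            self._load(page)
        if self.predict and self.last_page is not None:
            # Stand-in predictor: assume the last stride repeats and
            # promote that page before it is requested.
            target = page + (page - self.last_page)
            if target >= 0 and target not in self.dram:
                self._load(target)
        self.last_page = page


def count_misses(trace, dram_pages, predict):
    memory = TieredMemory(dram_pages, predict)
    for page in trace:
        memory.access(page)
    return memory.demand_misses


# Two sequential scans over 100 pages with room for only 16 pages in DRAM.
trace = list(range(100)) * 2
print("reactive paging misses:  ", count_misses(trace, 16, predict=False))
print("predictive paging misses:", count_misses(trace, 16, predict=True))
```

On this deliberately friendly trace, the reactive model stalls on all 200 accesses, while the predictive one stalls only on the four accesses where no stride is established yet. Real access patterns are far less regular, which is why a production system needs a much stronger predictor than a fixed stride.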

Bottom Line

Application execution, therefore, proceeds as if all memory pages are in DRAM, even when a server has less DRAM installed to reduce costs. Performance is nearly unchanged, while capex decreases. An added benefit of avoiding special hardware is that an enterprise can employ the MEXT technology on premises-based systems as well as in the cloud, where it allows the enterprise to rent cheaper instances and lower operational costs by up to 50%, according to MEXT’s estimates. Moreover, the technology is CPU-independent, working with Intel Xeon, AMD Epyc, and Arm-based chips. It works in virtual machines, in containers, and on bare metal. With MEXT, IT leaders can lower server costs without compromising application performance.

MEXT sponsored this post. For more information about the company and its products, go to www.mext.ai.