-

Google TPU to Host OpenAI Models

OpenAI is not deploying inference services on Google TPUs, according to Reuters. The news service had previously reported that the ChatGPT developer agreed to use Google Cloud. Subsequently, various free-to-read sites parroted The Information, saying OpenAI would employ the TPU. Running OpenAI services on the TPU would reduce its reliance on Nvidia GPUs hosted by… continue reading

-

Slash Server Costs Without Hardware or Software Changes

Have you noticed how expensive servers are? It’s easy to point a finger at processor prices. More than one-third of Intel’s Granite Rapids (Xeon 6) models list for over $5,000, and the company prices many of those above $10,000. Memory, however, is a bigger culprit; DRAM can cost twice as much as a processor. Worse,… continue reading

-

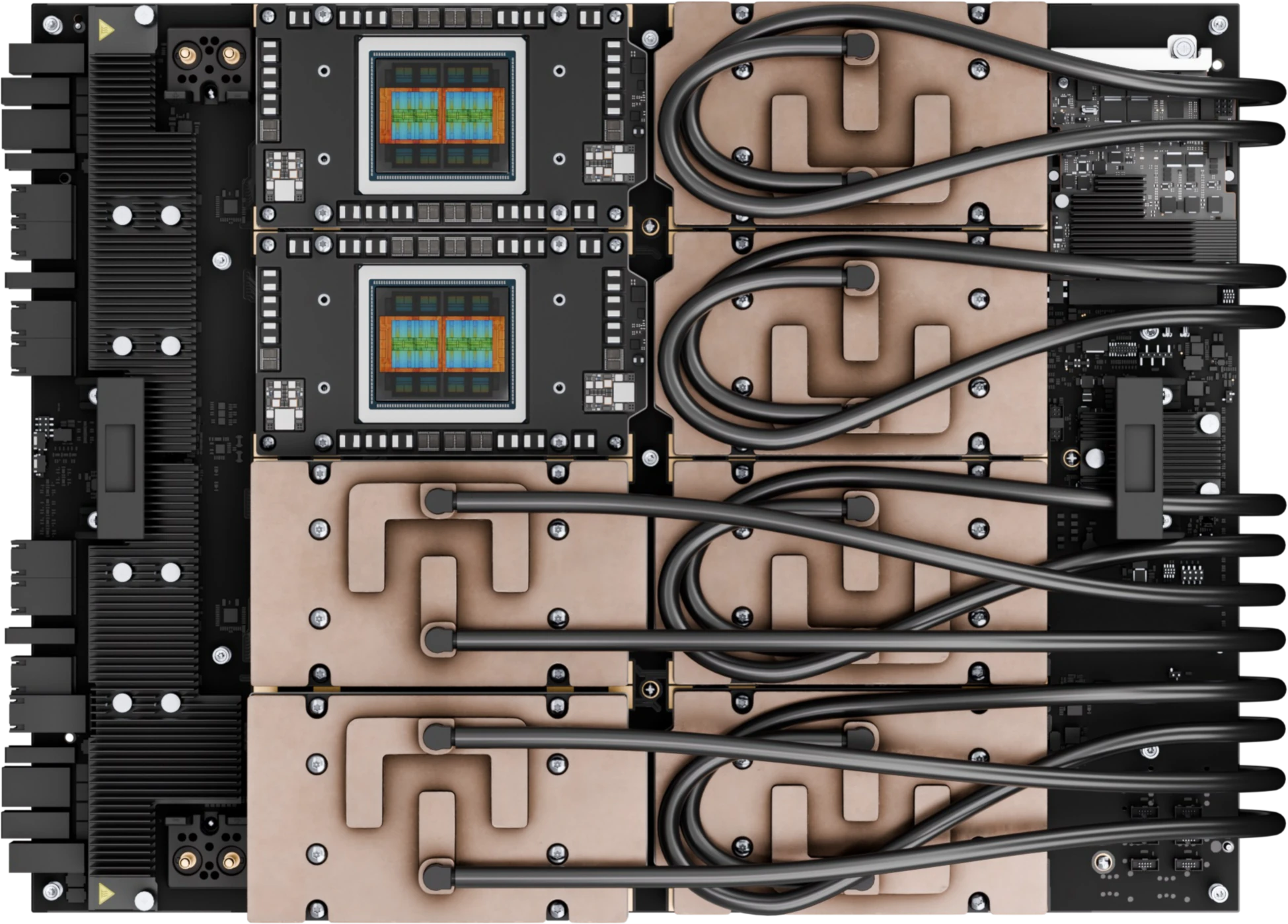

AMD Bares Instinct MI350 GPU, Teases MI400 and MI500

Today AMD officially launched the Instinct MI350, an AI accelerator aiming to compete with Nvidia’s Blackwell, and revealed its roadmap to rack-scale systems. The company also teased the next two Epyc generations and a new Pensando DPU. continue reading

-

Untethered

Untether AI has shut down, ceasing supply and support for its hardware and software, and AMD is picking up the team, according to the AI accelerator startup. Untether shipped two product generations and delivered superior power efficiency. However, its NPUs had limitations and faced a relentless incumbent, all in the context of a rapidly evolving… continue reading

-

Qualcomm Nabs Alphawave to Bolster Data-Center Strategy

Qualcomm’s takeover of Alphawave, which values the company at $2.4 billion, signals a deeper push into custom data-center processors, leveraging Alphawave’s high-speed serdes technology and established ASIC business. The move aims to complement Qualcomm’s CPU and AI offerings and capitalize on hyperscalers’ growing demand for custom silicon. continue reading

Popular

Sponsored Content and Plugs

- Ceva Boosts NeuPro-M NPU Throughput and Efficiency

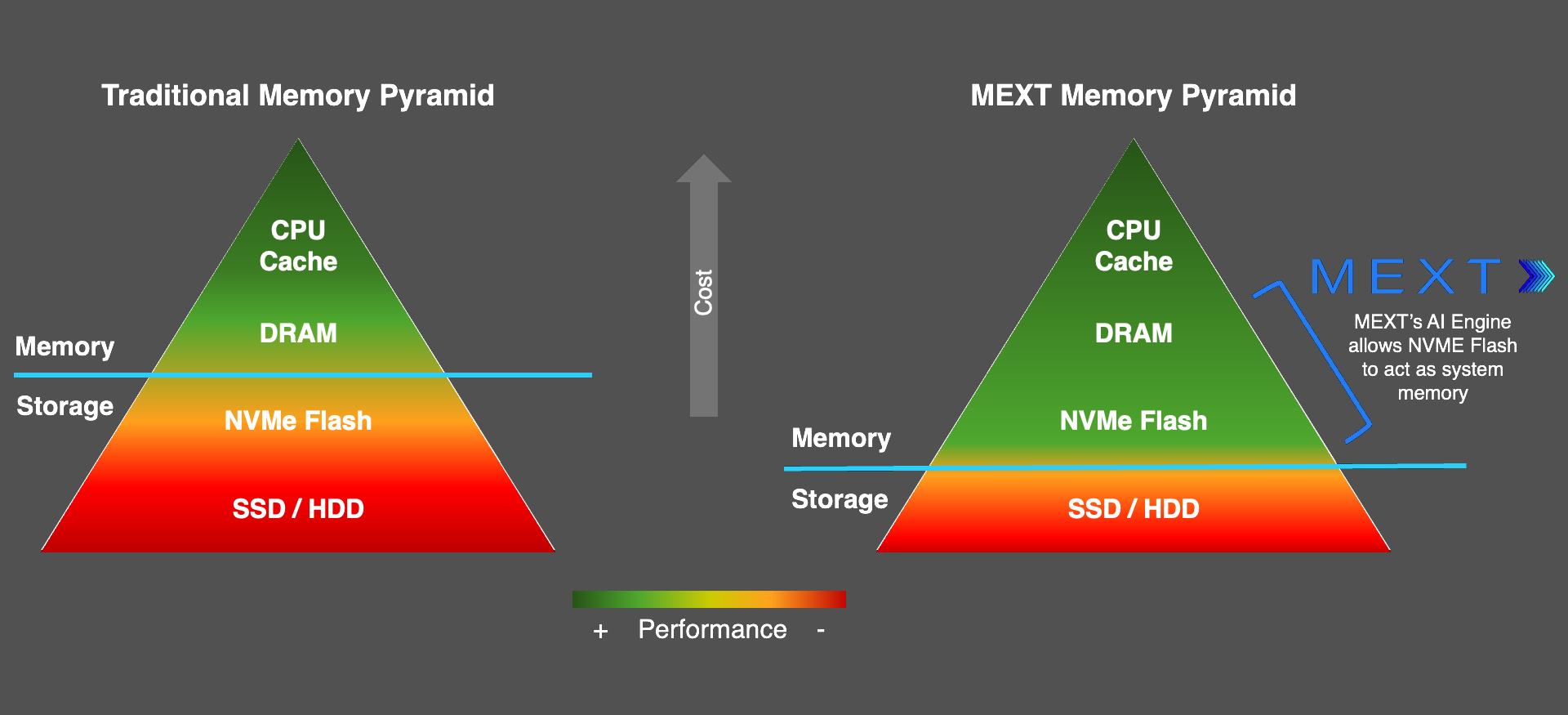

- Slash Server Costs Without Hardware or Software Changes

- MEXT Helps IT Leaders Find the Sweet Spot

- Chips Act Backs Chiplets

- Arteris Expands NoC Offerings for AI Accelerators
